Consider the Amount of Data Generated, Now Consider the Carbon Impact

Have you ever heard the term Zettabyte? If you work in the world of data, the cloud, and hyperscalers, you will know that a Zettabyte comes after Exabyte, Petabyte, Terabyte, and Gigabyte. For most of us, though, it’s a far-out concept that has little bearing on our day-to-day life. Let me tell you why you should care.

A Zettabyte is the next level of measurement for the volume of data that is generated by all the things we do. You know, all those text messages and emails and photos that we generate each and every day. Plus all of the data generated by all the companies, research institutes, and governments to help us more easily navigate the world. With the explosion of the Internet of Things (IoT), the amount of data generated has expanded exponentially.

We all know the concept of the Megabyte (MB). One thousand MBs make up a Gigabyte (GB), 1,000 GBs make up a Terabyte (TB), and so on up the line. What does that mean in real terms? Roy Williams (https://labcit.ligo.caltech.edu/~rwilliam/) gives us some examples.

Examples of what data volumes represent in the real world.
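
To make the unit ladder concrete, here is a minimal Python sketch of the arithmetic. It assumes decimal (SI) units, where each step up is a factor of 1,000, as described above. The names `UNITS`, `bytes_in`, and `convert` are illustrative choices of mine, not taken from any of the sources cited in this article.

```python
# Decimal (SI) data units; each one is 1,000 times the previous one.
# Assumption: decimal prefixes (1 MB = 1,000,000 bytes), not binary ones.
UNITS = ["MB", "GB", "TB", "PB", "EB", "ZB"]


def bytes_in(unit: str) -> int:
    """Return the number of bytes in one `unit`."""
    # A megabyte is 1000**2 bytes; every step up multiplies by 1000 again.
    return 1000 ** (UNITS.index(unit) + 2)


def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Convert `value` from one unit to another, for example ZB to GB."""
    return value * bytes_in(from_unit) / bytes_in(to_unit)


if __name__ == "__main__":
    # Print the whole ladder from Megabyte to Zettabyte.
    for unit in UNITS:
        print(f"1 {unit} = {bytes_in(unit):,} bytes")
    # How many Gigabytes fit in a single Zettabyte?
    print(f"1 ZB = {convert(1, 'ZB', 'GB'):,.0f} GB")
```

Under these assumptions, one Zettabyte works out to one trillion Gigabytes, which gives a sense of how far up the ladder we have climbed.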

As I learned while attending the Fujifilm Global IT Executive Summit in San Diego, we entered the Zettabyte (ZB) era around 2016. According to research presented by Fred Moore (https://horison.com), the amount of data generated every year is staggering. Consider that 1 ZB represents 7.2 trillion MP3 songs, or all the data from 57.5 billion 32GB iPads, or 125 years of 1-hour television shows. Researchers predict that by 2025, just 3 years from now, the amount of data generated will grow to 175 ZB.

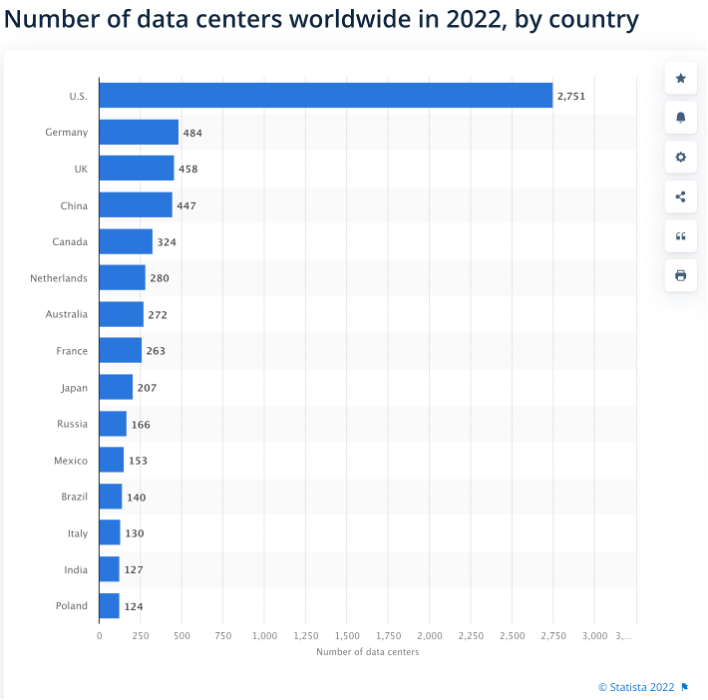

With all of this data being generated, there is a need for bigger data centers that operate on state-of-the-art computing methods. A hyperscaler is a data center that uses agile methods of computing in order to seamlessly scale up or down as necessary. These data centers often operate as Infrastructure as a Service (IaaS) to help companies with extremely large volumes of data. Hyperscalers are a critical component of the cloud and are growing at a rapid pace.

All of this costs energy and has a carbon impact. As new data centers come online, they are being built with sustainability in mind. Meta recently announced that its Temple, Texas, facility (https://www.prnewswire.com/news-releases/meta-to-open-hyperscale-data-center-in-temple-texas-301514530.html) will be powered by 100% renewable energy. That is good news.

But even more important is how to manage the increasing demand created by all the data generated. Streamlining processes, using best-practice technology and standards, and upgrading to more efficient equipment are all ways to improve the carbon footprint of the hyperscaler.

Consider data centers as an integral component of our modern infrastructure. We can’t imagine living in a world without the internet and all its derivative technologies that make our lives easier. But with that comes the reality that the Zettabytes of data need to go somewhere to be managed, and that it needs to be done sustainably.

Thanks to Rich Gadomski from Fujifilm for inviting me to attend the Fujifilm Summit. I learned a lot about data and the value of solutions, including tape, that support and enable sustainable data management.